Graphics

Participants: John C. Hart, Victor Lu, Yuntao Jia, Jared Hoberock

Executive Summary

We can extrapolate today's world of online social networking into a near future of 3-D social online virtual worlds where avatars are real-time 3-D scans of participants who not only occupy and communicate in the virtual environment, they are also constructing and sharing architecture and other 3-D and media content on the fly. Such a future relies on critical client-side processing of spatial queries (for self-scanning, photorealism and simulation) so we are focusing our effort on high performance multicore client algorithms for the construction, update and application of spatial data structures.

Goals - Extended Description

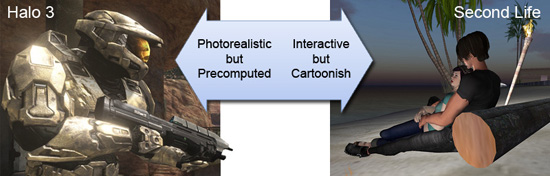

Real-time online virtual worlds (e.g. World of Warcraft and Second Life) have transformed the internet into an immersive space for social interaction, but the visuals and simulations of these online collaborative environments lag well behind those of stand-alone video games. Modern video games (like Halo 3) achieve cinematic photorealism and fluid motion by limiting the set of viewpoints and configurations of the game environment, and precomputing its lighting and animation over this limited set (via, e.g., the GPU-assisted precomputed radiance transfer used in the Xbox 360), essentially encoding the visuals into a massive high-dimensional multiple-viewpoint movie. This precomputation requires careful gameplay coordination (which makes game development expensive and time consuming), and does not support user interaction (e.g., building in an online virtual environment). The increased computational power of future multicore processors can overcome the need for precomputation, making videogames cheaper and faster to produce and online virtual environments more realistic.

The performance of shape modeling, scene rendering, physical simulation or any cyber-physical application requires the ability to efficiently find data points nearby a given query point in space. The efficiency of these nearest neighbor queries relies on spatial data structures, and the fundamental challenge for multicore processing of these visual applications will be the development of efficient parallel algorithms for processing a shared spatial data structure. To achieve a goal of realistic rendering and animation of fully dynamic user-reconfigurable virtual worlds, we need new multicore algorithms to efficiently maintain kinetic spatial data that support insertion, deletion, motion, and rebalancing operations.

Results

Shader Sorting

The 2010 generation of graphics cards will support real-time ray tracing for games and visual simulation. The usual method of display is rasterization, which renders all of the pixels of a triangle before moving to the next triangle, and benefits from shader coherence since all of the pixels run the same shader program to determine their color. For ray tracing, graphics cards will be rendering pixels from different triangles and different shaders, which is problematic because the GPU is based on SIMD vector operations that reach peak efficiency when all of the processors are running identical programs.

We showed at High Performance Graphics 2009 that when ray tracing on a GPU, it is sometimes better to gather all of your pixel shading requests and sort them into bins that share the same shader program. We showed that except for simple shaders, the increased throughput of sorted shader requests was well worth the extra time taken to perform the bin sorting.

This approach works for any SIMD processor including GPU's from NVIDIA, AMD and Intel. In fact the NVIDIA GPU does some of this sorting at a very fine granularity in hardware, which is revealed in our measurements. But our coarse granularity sorting provides a significantly better performance improvement, which indicates that domain-specific programmer initiated program scheduling can improve throughput, and we are working with compiler developers and tuning researchers on program semantics for more easily achieving this improved throughput in any SIMD-vector parallel program.

Parallel Construction of k-D Trees for Ray Tracing Dynamic Scenes

To ray trace a scene efficiently, one needs a spatial data structure to avoid intersecting all rays against all triangles, and for dynamic scenes one needs to construct and update these structures in real-time. Working with Prof. Sarita Adve and students Byn Choi, Hyojin Sung and Rakesh Komuravelli, we have analyzed the construction of a popular ray-acceleration structure called the surface area heuristic k-D tree. The surface area heuristic (SAH) determines how to best subdivide scene geometry hierarchically to accelerate ray tracing but can be expensive to compute and tricky to parallelize.

In fact the state-of-the-art algorithms for parallel k-D tree construction from Microsoft Research and Intel avoid computing SAH until the tree is developed to a level contained as many nodes as processors. This works fine for small numbers of processors, but we show that it significantly degrades rendering performance as we soon approach hundreds of processors. We thus developed two new parallel k-D tree construction algorithms that properly compute SAH to find the best scene subdivisions at all levels of the tree, using parallel operators to efficiently scan scene geometry to find the best subdivisions for a smaller number of nodes.

Additional Resources

| Graphics | Computational Imaging and Video | Natural Language Processing | Secure Web Browsing | Tele-Immersion | Video Vision Library |